Some time ago Konrad Rzeszutek Wilk (the Xen Linux maintainer) came up with a list of possible improvements to the Xen PV block protocol, which is used by Xen guests to reduce the overhead of emulated disks.

This document is quite long, and the list of possible improvements is also not small, so we decided to implement them step by step. One of the first items that seemed like a good candidate for improving performance was what are called “indirect descriptors”. The idea is borrowed from the VirtIO protocol and, put simply, consists of fitting more data into each request. I will explain how this is done later in this post, but first I would like to describe the Xen PV disk protocol a little, so that the change is easier to understand.

Xen PV disk protocol

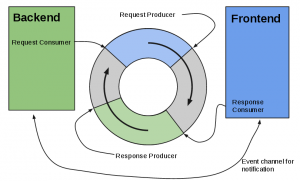

The Xen PV disk protocol is very simple: it only requires a memory page shared between the guest and the driver domain in order to queue requests and read responses. This shared page is 4KB in size and is mapped by both the guest and the driver domain. The structure used by the frontend (blkfront) and the backend (blkback) to exchange data is also very simple and is usually known as a ring: a circular queue plus some counters pointing to the last produced requests and responses.
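To make the ring idea concrete, here is a minimal single-threaded sketch of a shared ring. The layout and the names (`demo_sring`, `front_push`, `back_process`, `front_pop`) are hypothetical and are not Xen's; the real definitions are macros in Xen's `io/ring.h` header. As in the real protocol, requests and responses share the same slots, and both sides use free-running counters that are masked onto a slot index. Memory barriers and event-channel notifications, which real code needs, are omitted.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical simplified ring; 32 slots, a power of 2 so masking wraps. */
#define RING_SIZE 32u
#define DONE_FLAG (1ull << 63)   /* marks a slot that now holds a response */

struct demo_sring {              /* this part lives in the shared page */
    uint32_t req_prod;           /* requests published by the frontend */
    uint32_t rsp_prod;           /* responses published by the backend */
    uint64_t slot[RING_SIZE];    /* each slot holds a request or its response */
};

struct demo_front { struct demo_sring *s; uint32_t rsp_cons; }; /* frontend-private */
struct demo_back  { struct demo_sring *s; uint32_t req_cons; }; /* backend-private */

/* Counters run freely; masking maps them onto a slot. */
static uint32_t ring_slot(uint32_t idx) { return idx & (RING_SIZE - 1); }

/* Frontend: queue a request id; returns -1 if every slot is in flight. */
static int front_push(struct demo_front *f, uint64_t id)
{
    struct demo_sring *s = f->s;
    assert((id & DONE_FLAG) == 0);
    if (s->req_prod - f->rsp_cons >= RING_SIZE)
        return -1;
    s->slot[ring_slot(s->req_prod)] = id;
    s->req_prod++;               /* real code issues a write barrier first */
    return 0;
}

/* Backend: consume the oldest request and answer it in the same slot. */
static int back_process(struct demo_back *b)
{
    struct demo_sring *s = b->s;
    if (b->req_cons == s->req_prod)
        return -1;               /* no pending requests */
    uint32_t i = ring_slot(b->req_cons++);
    s->slot[i] |= DONE_FLAG;     /* response = request id with the flag set */
    s->rsp_prod++;
    return 0;
}

/* Frontend: read the next response; returns -1 if none is available. */
static int front_pop(struct demo_front *f, uint64_t *id)
{
    struct demo_sring *s = f->s;
    if (f->rsp_cons == s->rsp_prod)
        return -1;
    *id = s->slot[ring_slot(f->rsp_cons++)] & ~DONE_FLAG;
    return 0;
}
```

Because the frontend only reuses a slot after consuming its response, the condition `req_prod - rsp_cons < RING_SIZE` is enough to stop it from overwriting a request the backend has not answered yet.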

The maximum number of requests (and responses) in a ring is calculated by dividing the size of the shared memory, minus the space taken by the indexes, by the maximum size of a request, and then rounding the result down to the nearest power of 2. In our case (4096 - 64) / 112 = 36, which rounds down to 32, so a ring can hold at most 32 requests.
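The calculation above can be sketched as follows. Xen derives the ring size at compile time with macros in its `io/ring.h` header; this is only a runtime illustration of the same arithmetic, and `round_down_pow2` and `ring_entries` are hypothetical helper names. The 64-byte header and the 112-byte request size are the figures quoted above.

```c
#include <assert.h>
#include <stddef.h>

/* Round n down to the largest power of 2 that is <= n (n must be > 0). */
static size_t round_down_pow2(size_t n)
{
    assert(n > 0);
    size_t p = 1;
    while (p <= n / 2)
        p *= 2;
    return p;
}

/* Ring entries that fit in a shared page, given the size of the header
 * holding the indexes and the size of one request slot. */
static size_t ring_entries(size_t page_size, size_t header_size, size_t slot_size)
{
    return round_down_pow2((page_size - header_size) / slot_size);
}
```

With the values from the text, `ring_entries(4096, 64, 112)` first computes 36 slots and then rounds that down to 32.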

Now that we know how many requests fit in a ring, let's examine the structure used to encapsulate a request:

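```c
#include <stdint.h>

/* Type widths as defined in Xen's public headers. */
typedef uint16_t blkif_vdev_t;
typedef uint64_t blkif_sector_t;
typedef uint32_t grant_ref_t;

struct blkif_request {
    uint8_t operation;                 /* e.g. read or write */
    uint8_t nr_segments;               /* number of valid entries in seg[] */
    blkif_vdev_t handle;               /* virtual device the request targets */
    uint64_t id;                       /* echoed back in the response */
    blkif_sector_t sector_number;      /* first sector on the disk */
    struct blkif_request_segment {
        grant_ref_t gref;              /* grant reference of the data page */
        uint8_t first_sect, last_sect; /* 512-byte sectors used within the page */
    } seg[11];
};
```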

I’ve simplified the structure a little. Most of the fields are used to identify and track the request, but the important one is “seg”, because it describes where the data read from or written to the disk is stored. Each segment contains a grant reference, which points to a memory page shared between blkfront and blkback and used to transfer the data. Since a request can have at most 11 segments and each segment references a 4KB page, a single request can carry at most 44KB of data (11 * 4KB = 44KB). Taking into account that a ring can have at most 32 requests in flight at the same time, the maximum outstanding IO in a ring is 1408KB (44KB * 32 = 1408KB). Frankly, that’s not much: most modern disks can consume this amount of data very quickly, which turns the PV protocol itself into the bottleneck.
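The same limits can be expressed as two small helpers. This is a sketch with hypothetical function names that simply multiplies the figures from the text (11 segments, 4KB pages, 32 ring slots):

```c
#include <assert.h>
#include <stdint.h>

/* Bytes one classic request can carry: every segment grants one page. */
static uint64_t request_bytes(unsigned segs_per_request, unsigned page_bytes)
{
    assert(segs_per_request <= 11);   /* seg[11] caps a classic request */
    return (uint64_t)segs_per_request * page_bytes;
}

/* Bytes in flight when every ring slot holds a full request. */
static uint64_t outstanding_bytes(unsigned segs_per_request, unsigned page_bytes,
                                  unsigned ring_requests)
{
    return request_bytes(segs_per_request, page_bytes) * ring_requests;
}
```

`request_bytes(11, 4096)` gives 45056 bytes (44KB), and `outstanding_bytes(11, 4096, 32)` gives 1441792 bytes (1408KB).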

Several approaches were proposed to overcome this limitation. One, by Intel, consisted of creating another shared ring that only contained segments, which increased the maximum amount of data in a request to 4MB. Another, by Citrix, expanded the size of the shared ring by using multiple pages, so that the maximum number of in-flight requests could be increased. Both proposals focused on expanding the maximum outstanding IO, but neither was merged upstream. Indirect descriptors have an effect very similar to Intel’s approach, since they increase the maximum amount of data a request can handle, pushing it even further than 4MB.

Xen indirect descriptors implementation

To overcome this limitation, Konrad suggested using something similar to VirtIO indirect descriptors, and I frankly think it’s the best solution, because it allows us to scale the maximum amount of data a request can contain, and modern storage devices tend to like big requests. Indirect descriptors introduce a new request type, used only for read and write operations, which places all the segments inside shared memory pages instead of directly in the request. The structure of an indirect request is as follows:

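```c
struct blkif_request_indirect {
    uint8_t operation;              /* BLKIF_OP_INDIRECT */
    uint8_t indirect_op;            /* the actual operation: read or write */
    uint16_t nr_segments;           /* total segments across the indirect pages */
    uint64_t id;
    blkif_sector_t sector_number;
    blkif_vdev_t handle;
    uint16_t _pad2;
    grant_ref_t indirect_grefs[8];  /* grants of the pages holding the segments */
};
```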

What we have done here is mainly replace the “seg” array with an array of grant references, each pointing to a shared memory page filled with structures like the following:

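```c
struct blkif_request_segment_aligned {
    grant_ref_t gref;               /* grant reference of the data page */
    uint8_t first_sect, last_sect;  /* 512-byte sectors used within the page */
    uint16_t _pad;                  /* pads each entry to 8 bytes */
};
```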
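To illustrate how segments are spread over the indirect pages, the sketch below maps a segment number to the indirect page that holds it and to its slot within that page, and computes how many pages a request needs. Linux blkfront has its own helpers for this; the names here (`SEGS_PER_INDIRECT_PAGE`, `indirect_pages`, `locate_segment`) are hypothetical, and a 4KB page is assumed.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t grant_ref_t;       /* 32-bit grant reference, as in Xen */

struct blkif_request_segment_aligned {
    grant_ref_t gref;
    uint8_t first_sect, last_sect;
    uint16_t _pad;
};

#define PAGE_BYTES 4096u            /* assumed page size */
#define MAX_INDIRECT_PAGES 8u       /* length of indirect_grefs[] */
#define SEGS_PER_INDIRECT_PAGE \
    ((unsigned)(PAGE_BYTES / sizeof(struct blkif_request_segment_aligned)))

/* Number of indirect pages needed to describe nr_segs segments (rounded up). */
static unsigned indirect_pages(unsigned nr_segs)
{
    return (nr_segs + SEGS_PER_INDIRECT_PAGE - 1) / SEGS_PER_INDIRECT_PAGE;
}

/* Locate segment `seg` of a request: which indirect_grefs[] page holds it,
 * and at which slot inside that page. */
static void locate_segment(unsigned seg, unsigned *page, unsigned *slot)
{
    assert(seg < MAX_INDIRECT_PAGES * SEGS_PER_INDIRECT_PAGE);
    *page = seg / SEGS_PER_INDIRECT_PAGE;
    *slot = seg % SEGS_PER_INDIRECT_PAGE;
}
```

For example, segment 600 lives in the second indirect page (index 1) at slot 88, and a request with 32 segments needs only one indirect page.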

Each of these structures is 8 bytes in size, so we can fit 512 segments (4096 / 8 = 512) in each shared page. Since a request can reference up to 8 such pages, this gives a maximum of 4096 segments per request (512 * 8 = 4096). As each segment references a 4KB memory page, a single request can carry 16MB of data (4096 * 4KB = 16MB), which is quite a lot compared to the previous 44KB. It also means that a full ring can have 512MB of outstanding data (16MB * 32 = 512MB) when all the indirect segments are used.
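Putting these numbers side by side with the classic request format (here 8 B is the size of one segment descriptor and 8 is the number of entries in `indirect_grefs`):

$$
\begin{aligned}
\text{segments per indirect page} &= 4096\,\text{B} / 8\,\text{B} = 512 \\
\text{segments per request} &= 512 \times 8 = 4096 && (\text{classic: } 11) \\
\text{data per request} &= 4096 \times 4\,\text{KB} = 16\,\text{MB} && (\text{classic: } 44\,\text{KB}) \\
\text{data in a full ring} &= 16\,\text{MB} \times 32 = 512\,\text{MB} && (\text{classic: } 1408\,\text{KB})
\end{aligned}
$$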

This is obviously a lot of data, so in Linux blkback the maximum number of segments per request is currently set to 256, and in Linux blkfront the default is 32, in order to provide a good balance between memory usage and disk throughput. The frontend value can be tuned with a boot-time option, so users can increase the number of indirect segments per request without much fuss: simply add the following option to your guest kernel command line:

```
xen_blkfront.max=64
```

This increases the number of segments per request from 32 to 64. There is no rule of thumb for choosing the number of segments that will perform best with your storage, so I recommend experimenting a little to find the value that delivers the best throughput for your use case.
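As a rough guide to what the option changes, the sketch below computes the data per request and per full ring for a given segment count. It assumes, as we understand Linux blkfront to behave, that the frontend uses the smaller of its own limit and the limit advertised by the backend; the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_BYTES 4096u   /* each segment grants one 4KB page */
#define RING_REQUESTS 32u  /* requests in a single-page ring */

/* Assumption: the frontend uses min(its own limit, the backend's limit). */
static unsigned effective_segments(unsigned front_max, unsigned back_max)
{
    assert(front_max > 0 && back_max > 0);
    return front_max < back_max ? front_max : back_max;
}

/* Data one request can carry with nr_segs segments. */
static uint64_t request_bytes(unsigned nr_segs)
{
    return (uint64_t)nr_segs * PAGE_BYTES;
}

/* Data in flight when every ring slot holds a full request. */
static uint64_t ring_bytes(unsigned nr_segs)
{
    return request_bytes(nr_segs) * RING_REQUESTS;
}
```

For example, with `xen_blkfront.max=64` and a backend limit of 256, each request can carry 256KB and a full ring 8MB, compared with 128KB and 4MB at the default of 32.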

Indirect descriptor support for the Xen PV block protocol was merged in Linux 3.11, but remember that to try it you need a kernel with indirect descriptor support in both the guest and the driver domain! If you are unsure whether your backend supports indirect descriptors, just look for the “feature-max-indirect-segments” entry in the backend’s xenstore directory for your PV disk.
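If you want to script that check, the sketch below builds the path of the key and interprets its value. It assumes the usual layout `/local/domain/<backend domid>/backend/vbd/<guest domid>/<devid>/`; the helper names are hypothetical, and reading the value itself is left to a tool such as `xenstore-read` or the xenstore library.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Build the xenstore path of the indirect-segments key for a PV disk,
 * assuming the usual backend layout. Returns -1 if buf is too small. */
static int indirect_key_path(char *buf, size_t len, unsigned backend_domid,
                             unsigned guest_domid, unsigned devid)
{
    assert(buf != NULL && len > 0);
    int n = snprintf(buf, len,
                     "/local/domain/%u/backend/vbd/%u/%u/feature-max-indirect-segments",
                     backend_domid, guest_domid, devid);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}

/* Interpret the key's value string. A missing key (NULL) or a malformed
 * value is treated as "no indirect descriptor support" (0). */
static unsigned long parse_max_indirect(const char *value)
{
    char *end;
    unsigned long v;
    if (value == NULL || strchr(value, '-') != NULL) /* strtoul would wrap "-1" */
        return 0;
    v = strtoul(value, &end, 10);
    return (end == value || *end != '\0') ? 0 : v;
}
```

For example, for a hypothetical guest with domain id 5 whose first disk (`xvda`, device id 51712) is served from domain 0, the key is `/local/domain/0/backend/vbd/5/51712/feature-max-indirect-segments`.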